Fisheye warping for spherical mirror fulldome projection
Written by Paul Bourke
July 2012

Swedish translation by Marie Stefanova, August 2017

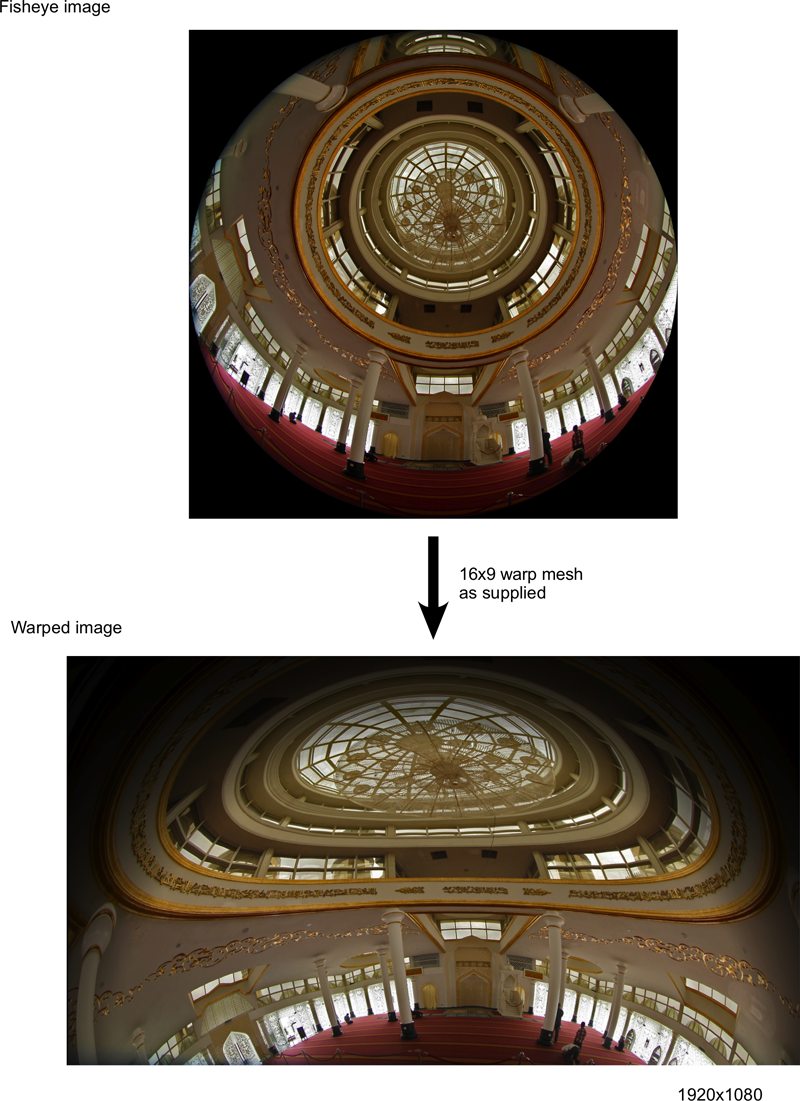

The following is an attempt at a technical description of how fisheye images are warped for use in a dome using the spherical mirror projection technique. A number of packages currently support this warping, including the author's own warpplayer, World Wide Telescope, Software Bisque, a patch for Quartz Composer, and Blender and Unity3D implementations, among others. Before introducing the warping, it is worth briefly discussing how fisheye and warped fisheye projections are generally configured in a planetarium (or in other domes such as the iDome). Whether the computer has a built-in display (a laptop or an iMac) or is a machine with a graphics card driving two outputs, the operator generally does not navigate using the dome but rather using a personal flat display. The two most common modes of operation are then as follows:

It is the author's advice that the fisheye should be rendered to a texture of sufficient resolution (see later) and then presented, or warped, onto both the operator display and the projected display. Whether the operator's display shows the fisheye and the projector display the warped image, or both show the warped image, is a matter for the software developer based upon ease of implementation and/or performance; both are acceptable. Note that data projectors can mirror the image vertically or horizontally, so this need not concern the software. However, to facilitate compatibility with other software, horizontally mirroring the image sent to the projector may be helpful. In any case, the image on the operator display must not be mirrored.

This discussion starts from the position of already having a fisheye image; the techniques for creating a fisheye using current real-time graphics APIs (eg: OpenGL and DirectX) are a separate discussion. They generally involve either multipass rendering of cube faces (environment maps) that are then assembled into a fisheye, or a vertex shader. Some discussion of this is available for Unity3D and for Blender. The motivation for this arose from the developers of Stellarium and NightShade, two astronomy visualisation packages which had included patchy support for spherical mirror projection, but implemented in a different fashion. The proposed method takes the fisheye image, generally rendered to an off-screen texture, and applies this texture to a mesh which implements the desired warping. There are some very important consequences to this:

The "magic" obviously occurs in the details of the mesh file. The author will include some default mesh files currently in common use. Ideally, however, a mesh file needs to be generated with knowledge of the projector/mirror/dome geometry as well as the optics of some of those components. In the current versions of Stellarium and Nightshade, the geometry and optical specification of the projection system are embedded in the code, and the user adjusts a number of parameters until the image looks correct on the dome. The author maintains that the best approach is an external application that creates warp mesh files (for those cases where the sample mesh files are inadequate); this way all applications can share one warping description. It should be noted that the current parameter set in Stellarium and Nightshade is inadequate on two fronts: some parameters relevant to the projection optics are not covered, and the parameters used are somewhat arbitrary in the sense that they do not follow standard methods for describing projector optics. At the moment there are at least three options for creating precise warp mesh files, including one based upon Blender and the author's own meshmapper, which is supplied with the warpplayer software. Below are some "standard" mesh files which have been used up to now. Note that using standard warp mesh files means the physical geometry of the projector and mirror must be adjusted to suit the mesh, whereas the custom calibration approach creates a mesh file that targets a particular geometric/optical arrangement. In general, standard mesh files are often satisfactory for inflatable domes, whereas fixed domes warrant the better result of a custom mesh.
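As a minimal sketch of what an external mesh-generating application writes out, the Python below produces a mesh file on a regular (x,y) grid from a caller-supplied mapping. It does not model any projector, mirror or dome: the hypothetical `uv_passthrough` mapping simply shows the fisheye unwarped in the centre of the screen, which can be handy for checking a mesh-based renderer. It follows the plain-text layout described below (type "2", then Nx Ny, then one node per line) and assumes, as the format is commonly described, that each node line is `x y u v intensity`, with x spanning -aspect to aspect, y spanning -1 to 1, (u,v) in the range 0 to 1, and nodes stored row by row starting at the bottom. A real generator would replace the mapping with a trace through the projector optics and mirror onto the dome.

```python
def uv_passthrough(x, y):
    """Hypothetical mapping: show the fisheye unwarped, centred, touching top and bottom."""
    u = (x + 1.0) / 2.0
    v = (y + 1.0) / 2.0
    inside = 0.0 <= u <= 1.0
    # Nodes beyond the left/right edge of the fisheye square get zero intensity (black).
    return min(max(u, 0.0), 1.0), v, (1.0 if inside else 0.0)


def write_mesh(path, nx, ny, aspect, mapping):
    """Write a warp mesh on a regular (x, y) grid; mapping(x, y) -> (u, v, intensity)."""
    lines = ["2", f"{nx} {ny}"]  # line 1: fisheye input type, line 2: Nx Ny
    for j in range(ny):          # rows, bottom to top (assumed storage order)
        y = -1.0 + 2.0 * j / (ny - 1)
        for i in range(nx):      # columns, left to right
            x = -aspect + 2.0 * aspect * i / (nx - 1)
            u, v, intensity = mapping(x, y)
            lines.append(f"{x:.6f} {y:.6f} {u:.6f} {v:.6f} {intensity:.3f}")
    with open(path, "w") as f:
        f.write("\n".join(lines) + "\n")


if __name__ == "__main__":
    # A 4:3 projector; the node count is arbitrary, only the aspect ratio shapes the mesh.
    write_mesh("passthrough_4x3.data", nx=41, ny=31, aspect=4.0 / 3.0, mapping=uv_passthrough)
```

Because the coordinates are normalised by the aspect ratio, the same file serves any projector resolution with that aspect.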

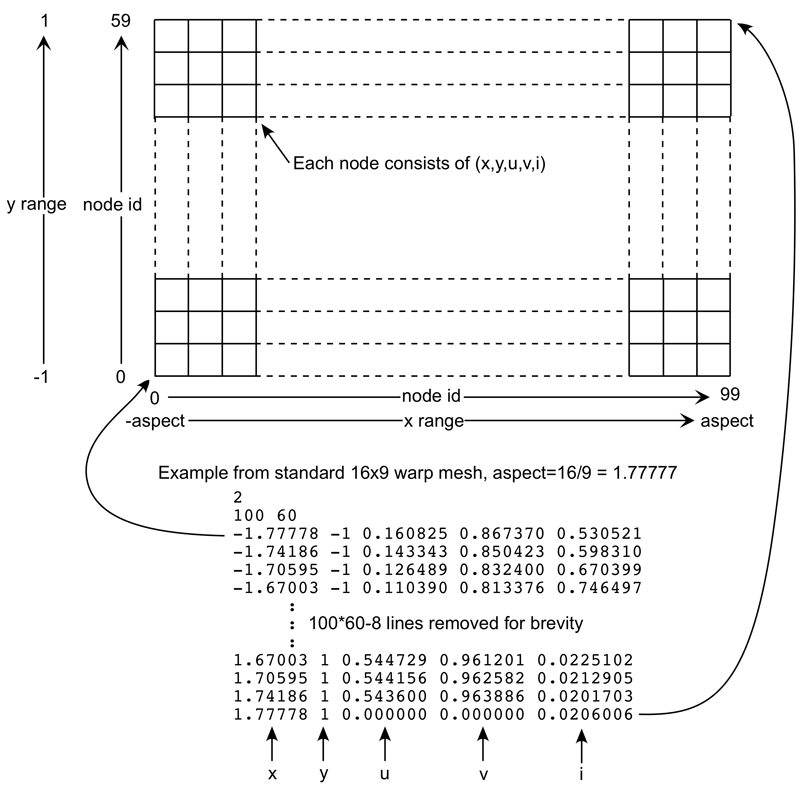

Please note that spherical mirror projection only makes sense for data projectors, and then only when the projector is operated in fullscreen mode. The form of the mesh is not a function of the projector resolution but rather of its aspect ratio. This explains why only three standard warp files are listed above: they correspond to the three most prevalent data projector aspect ratios. An aspect ratio of 5:4 is not included, as such projectors are relatively unusual today and not ideal for spherical mirror projection. If the reader downloads one of the warp mesh files above, they will be seen to be plain ASCII text files. The first line indicates the input image type, which is "2" for fisheye images. Remember that this basic technique can be used for input image projections other than fisheye, for example standard perspective images, spherical (equirectangular) projections, cylindrical projections, etc. But in the case of a planetarium warping a fisheye image, the first line is always "2", and this can optionally be used as a test that a chosen warp file is appropriate. The second line contains two numbers giving the dimensions of the 2D mesh: the first is the number of nodes horizontally (Nx) and the second the number of nodes vertically (Ny). This and other aspects are illustrated in the next figure. Each subsequent line of the file contains 5 numbers; they are:

Note that while the (x,y) positions of the nodes here form a regular grid, they need not. Sometimes it is easier to implement the warp with a regular (x,y) grid and varying (u,v); sometimes a variable (x,y) arrangement is preferable, even though it is still topologically a grid.
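Reading a mesh file and turning its grid into triangles for rendering might look like the following Python sketch. The names `WarpMesh`, `parse_mesh` and `triangulate` are hypothetical. It assumes each node line holds `x y u v intensity` in that order with nodes stored row by row, and additionally assumes that a negative intensity marks a node that should not be drawn. The check on the first value implements the optional "2" test for a fisheye mesh. Since only grid topology is used to form triangles, it works equally for regular and irregular (x,y) node layouts.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class WarpMesh:
    nx: int
    ny: int
    nodes: List[Tuple[float, ...]]  # each node: (x, y, u, v, intensity)

    def node(self, i, j):
        """Node in column i, row j (assumes row-by-row storage)."""
        return self.nodes[j * self.nx + i]


def parse_mesh(text):
    """Parse the plain-text warp mesh format into a WarpMesh."""
    tokens = text.split()
    mesh_type = int(tokens[0])
    if mesh_type != 2:
        raise ValueError(f"expected a fisheye mesh (type 2), got type {mesh_type}")
    nx, ny = int(tokens[1]), int(tokens[2])
    values = [float(t) for t in tokens[3:]]
    if len(values) != 5 * nx * ny:
        raise ValueError(f"expected {5 * nx * ny} values, found {len(values)}")
    nodes = [tuple(values[k:k + 5]) for k in range(0, len(values), 5)]
    return WarpMesh(nx, ny, nodes)


def triangulate(mesh):
    """Split each grid cell into two triangles, skipping cells that touch an unused node."""
    tris = []
    for j in range(mesh.ny - 1):
        for i in range(mesh.nx - 1):
            a = j * mesh.nx + i  # node (i, j)
            b = a + 1            # node (i + 1, j)
            c = a + mesh.nx      # node (i, j + 1)
            d = c + 1            # node (i + 1, j + 1)
            if any(mesh.nodes[k][4] < 0 for k in (a, b, c, d)):
                continue
            tris.append((a, b, d))
            tris.append((a, d, c))
    return tris
```

When drawing, each triangle vertex would take (x,y) as its position and (u,v) as its coordinate into the fisheye texture, with the intensity typically passed as a vertex colour that multiplies the texture.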

There are only two parameters the user needs to specify; they are:

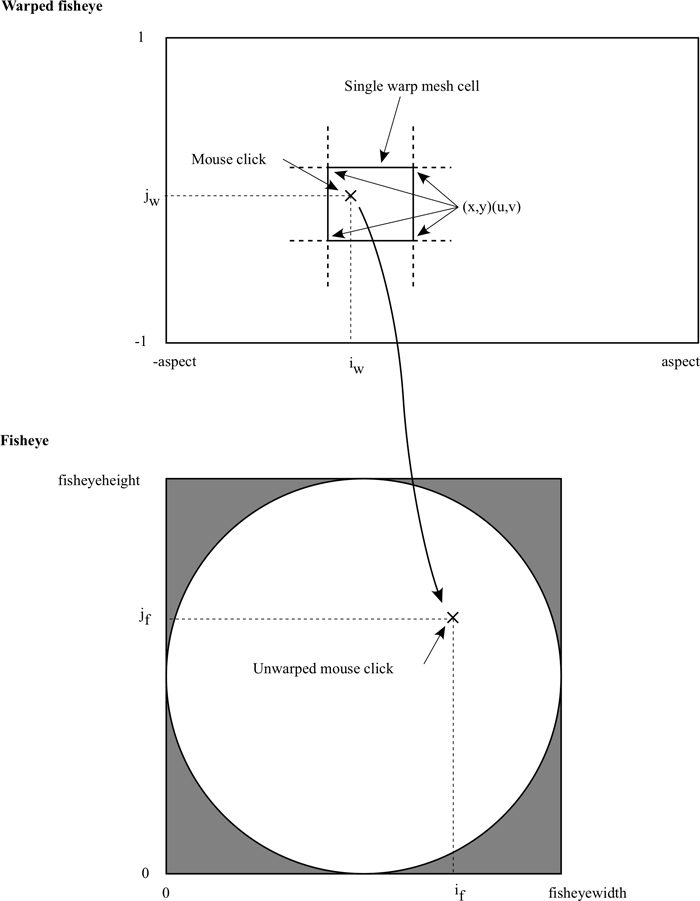

Mouse selection

Mouse clicking on a warped fisheye needs some special handling. While object selection can be handled in a number of different ways in real-time APIs, they generally require the pixel position before warping. So here it will be assumed that the application can already handle mouse clicks and object selection in fisheye space; the following describes how to derive the pixel position in the fisheye given a pixel position in the warped fisheye.

Given the convention in the above figure, the procedure is as follows.
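One way to carry out this inversion, sketched in Python below and not necessarily the author's exact procedure, is to convert the mouse pixel into the mesh's normalised (x,y) coordinates, find the mesh triangle containing that point, interpolate the node (u,v) values with barycentric weights, and scale (u,v) to a pixel in the fisheye texture. The function names are hypothetical, and the conventions are assumptions that may differ from the figure: nodes stored row by row as (x, y, u, v, intensity), x spanning -aspect to aspect and y spanning -1 to 1 with y up, window pixel y increasing downwards, a square fisheye texture with v = 0 at its bottom, and negative intensity marking unused nodes.

```python
def barycentric(p, a, b, c):
    """Barycentric weights of point p in triangle abc, or None if the triangle is degenerate."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if abs(det) < 1e-12:
        return None
    w1 = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    w2 = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return w1, w2, 1.0 - w1 - w2


def warped_to_fisheye(px, py, width, height, nx, ny, nodes, fisheye_size, eps=1e-9):
    """Map a mouse pixel in the warped window to a pixel in the fisheye texture.

    nodes: nx*ny tuples (x, y, u, v, intensity), stored row by row (assumed).
    Returns (fx, fy), or None if the click lands outside the used part of the mesh.
    """
    aspect = width / height
    # Window pixel -> normalised mesh coordinates (assumed convention, y up).
    x = aspect * (2.0 * px / width - 1.0)
    y = 1.0 - 2.0 * py / height
    for j in range(ny - 1):
        for i in range(nx - 1):
            a = j * nx + i
            quad = (a, a + 1, a + nx + 1, a + nx)
            if any(nodes[k][4] < 0 for k in quad):
                continue  # cell touches an unused node
            for tri in ((quad[0], quad[1], quad[2]), (quad[0], quad[2], quad[3])):
                pts = [nodes[k] for k in tri]
                w = barycentric((x, y), *[(n[0], n[1]) for n in pts])
                if w is None or min(w) < -eps:
                    continue  # point is not inside this triangle
                u = sum(wk * n[2] for wk, n in zip(w, pts))
                v = sum(wk * n[3] for wk, n in zip(w, pts))
                # Texture coordinate -> fisheye pixel (assumed: v = 0 at the image bottom).
                return u * fisheye_size, (1.0 - v) * fisheye_size
    return None
```

The returned fisheye pixel can then be handed to the application's existing fisheye-space selection code. The linear search over every cell is adequate for one click at a time; for a dense mesh, a coarse lookup grid over (x,y) would avoid scanning all triangles.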
