Edge blending using commodity projectors

Written by Paul Bourke
Assistance by Roberto Calati, and panoramic images by Peter Murphy
March 2004

Implementation for Quartz Composer contributed by Matthias Oostrik: seb.zip.

Introduction

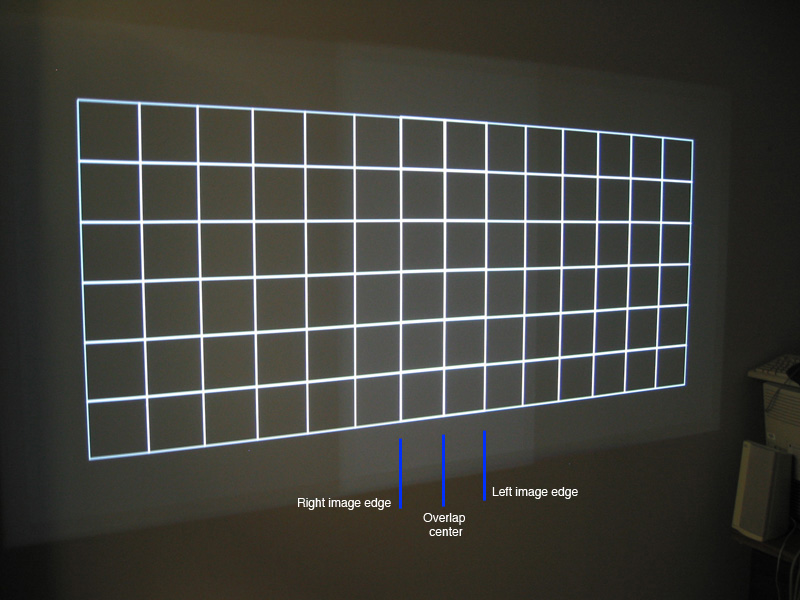

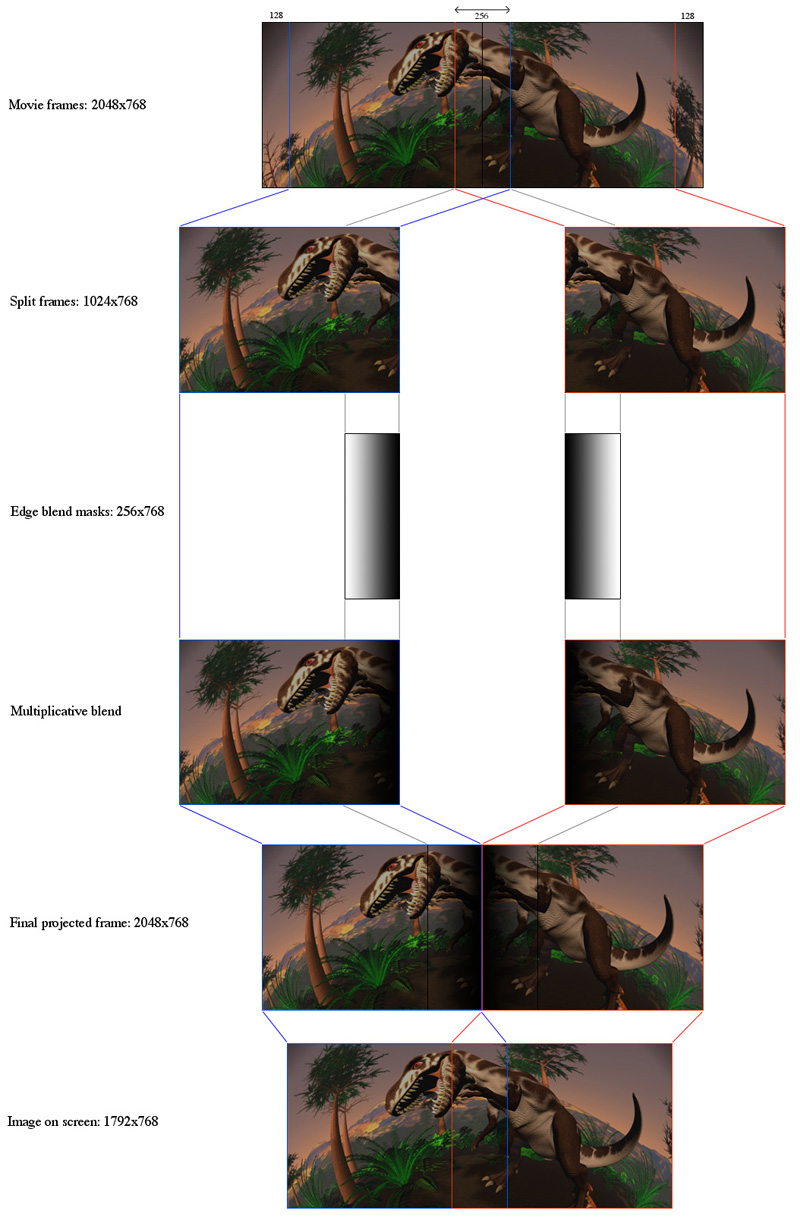

There are a number of applications that benefit from high resolution displays, for example, immersive virtual reality and scientific visualisation. Unfortunately, projector technology is currently limited to around XGA (1024x768) or SXGA (1280x1024) pixel resolution, and at the time of writing the latter are still rather expensive. The approach that can be taken, then, is to use low cost commodity projectors and tile a number of them together, each driven either by a different computer or by a different graphics pipe on the same computer. The first thing one discovers with commodity projectors is that, unlike the more expensive models, they don't have inbuilt support for tiled displays. In addition, their optics are such that it isn't possible to reliably align multiple projectors in a pixel-perfect way (or even close). With the tiling not pixel perfect, one ends up with either a gap between the images or a double-bright seam. Both are quite objectionable, especially for content where the camera is panning or objects are moving across the seam. The solution is to overlap the two images by a significant amount and modify the pixels in the overlap region so as to make the overlap as invisible as possible. This works because any slight projector misalignment or lens aberration now only reveals itself as a slight blurring of the image rather than as a sharp seam or gap.

Overlap

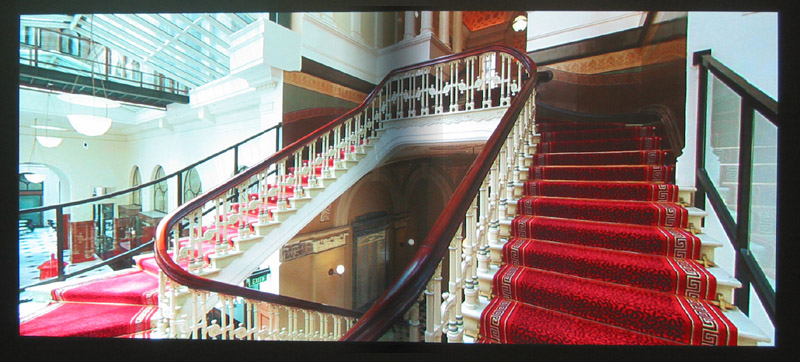

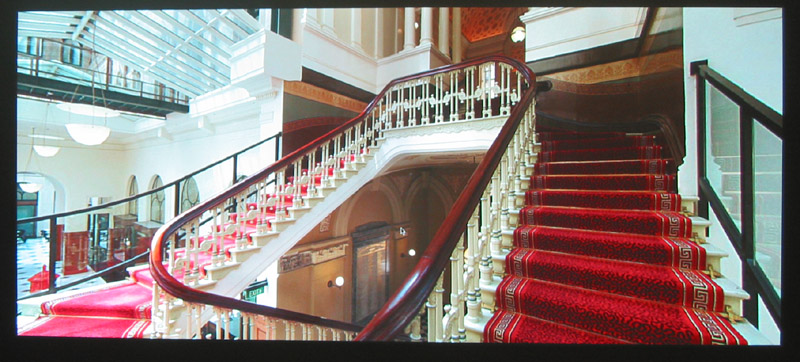

The first part of the process, then, is to overlap the images. In this discussion a panoramic image of the interior of a Sydney landmark building will be used. The pixels in the overlap region will be blended, that is, the images will be faded to black across the overlap region. The general approach works equally well for any number of images and also for images that may not be aligned in a rectangular fashion. The projectors used here are XGA (1024x768) and the overlap region is 256 pixels. The overlap need not strictly be a power of 2, but since the blending is performed using textures and OpenGL, a power of 2 is the most efficient. The degree of overlap is dictated by the amount of gamma correction required (see later) and the dynamic range of the blend function; for this exercise a 128 pixel overlap could not achieve results as good as the 256 pixel overlap. The resulting image width is then 2 x 1024 - 256 = 1792 pixels, and the height is 768 pixels. The image below shows the two projected images; as expected, the overlap region is brighter than it should be because two projectors contribute to it. The application used to present this panoramic is a locally developed previewer for stereoscopic panoramic images, written for OpenGL-based graphics. Creating the two overlapped images using OpenGL is particularly simple, as it only requires the appropriate change in the view frustum. Please note that the images presented here are digital photographs of the projected images taken in a darkened room; photographing projections well is not easy, which is why the colours seem a bit washed out.
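Because the tiles sit side by side on a flat image plane, the frustum change amounts to splitting the horizontal extent of the full frustum in proportion to each projector's pixel columns. The sketch below assumes a single row of identical projectors; the `Tile` type and `tile_layout` helper are hypothetical names, and the resulting `left`/`right` values are meant to be combined with a shared bottom, top, near and far (for example as arguments to `glFrustum`).

```python
from dataclasses import dataclass


@dataclass
class Tile:
    """One projector's share of the combined image (hypothetical helper type)."""
    first_col: int   # first column of the combined image shown by this projector
    last_col: int    # last column shown (inclusive)
    left: float      # left edge of this projector's frustum on the near plane
    right: float     # right edge of this projector's frustum on the near plane


def tile_layout(n_proj: int, proj_width: int, overlap: int,
                frustum_left: float, frustum_right: float):
    """Split the full horizontal frustum [frustum_left, frustum_right] across
    n_proj projectors in a row, each adjacent pair sharing `overlap` columns."""
    total_width = n_proj * proj_width - (n_proj - 1) * overlap
    # On a planar image, pixel columns map linearly to near-plane x coordinates.
    units_per_pixel = (frustum_right - frustum_left) / total_width
    tiles = []
    for i in range(n_proj):
        first = i * (proj_width - overlap)
        last = first + proj_width - 1
        tiles.append(Tile(first, last,
                          frustum_left + first * units_per_pixel,
                          frustum_left + (last + 1) * units_per_pixel))
    return total_width, tiles
```

For the configuration described above, `tile_layout(2, 1024, 256, -0.896, 0.896)` gives a combined width of 1792 pixels, with the second projector starting at column 768 of the combined image.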

Blending

Blending involves multiplying each pixel in the overlap region of one image by an amount such that, when it is added to the corresponding pixel in the other image, the result is the intended pixel value. This is readily achieved with any function f(x) whose value lies between 0 and 1: the pixel from the right image, say, is multiplied by f(x) and the corresponding pixel from the left image is multiplied by 1 - f(x). The function used here is given below. There are many possible choices, but this one was chosen because it allows experimentation with the exact form of the function, from linear to highly curved.

Graphically the function looks as follows. Note that the coordinates are normalised to 0 (the start of the edge blend region) and 1 (the end of the edge blend region). So, considering the right image, a pixel in the first column of the blend region is multiplied by 0 (it has no effect) and a pixel at the right edge of the blend region (column 255) is multiplied by 1. The blend function for the left-hand image is 1 minus the curve below. In the center of the blend region the curve passes through 0.5, which means each image pixel contributes the same amount to the final pixel value. The exact curvature is controlled by the parameter "p": the blending is linear for p=1, and the transition around 0.5 becomes steeper as p increases. p=1 tends to result in a visible step at the edges of the blending region; p=2 is used for all examples in this document.
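The formula itself is not reproduced in this text. The following Python sketch uses one curve with the properties described (assumed here, not quoted from the original): f(x) = 0.5(2x)^p below the center and 1 - 0.5(2(1-x))^p above it. It is linear for p=1, passes through 0.5 at x=0.5, and steepens around the center as p grows. Because it is symmetric, f(1-x) = 1 - f(x), so the left image's weight can be written either way.

```python
def blend(x: float, p: float = 2.0) -> float:
    """Assumed edge blend weight for the right-hand image at normalised
    position x in [0, 1] across the overlap (0 = start, 1 = end)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    if x < 0.5:
        return 0.5 * (2.0 * x) ** p              # rising half, 0 -> 0.5
    return 1.0 - 0.5 * (2.0 * (1.0 - x)) ** p    # mirrored half, 0.5 -> 1


# Per-column weights for a 256 pixel overlap: right image blend(c / 255),
# left image 1 - blend(c / 255), for c in 0..255.
right_weights = [blend(c / 255) for c in range(256)]
left_weights = [1.0 - w for w in right_weights]
```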

The edge blend is accomplished by drawing a polygon over the blend region, textured with the edge blend function above represented as a greyscale one-dimensional texture. This textured polygon is blended (in the OpenGL sense) so that it scales the pixels already in the image buffer. The nice thing about this method is that it can be trivially applied to any OpenGL application, since it is a post-processing stage applied after the normal geometry drawing is performed. The following image shows the mask texture across the blend region.
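As a rough sketch, assuming NumPy, the mask texture can be built as a 256-entry 8-bit greyscale array, and the effect of the blended polygon emulated on a copy of the framebuffer by multiplying the overlap columns by the mask. In OpenGL a multiplicative blend of this kind is commonly obtained with `glBlendFunc(GL_ZERO, GL_SRC_COLOR)`; whether the original previewer used exactly that setting is an assumption. The helper names and the blend curve form are hypothetical.

```python
import numpy as np


def blend_curve(x: np.ndarray, p: float = 2.0) -> np.ndarray:
    """Assumed blend curve: 0.5*(2x)^p below 0.5, mirrored above it."""
    return np.where(x < 0.5, 0.5 * (2.0 * x) ** p, 1.0 - 0.5 * (2.0 * (1.0 - x)) ** p)


def mask_texture(overlap: int = 256, p: float = 2.0, left_image: bool = False) -> np.ndarray:
    """8-bit greyscale 1D texture of blend weights across the overlap.
    The right image ramps from 0 up to 1; the left image uses 1 - f(x)."""
    f = blend_curve(np.linspace(0.0, 1.0, overlap), p)
    if left_image:
        f = 1.0 - f
    return np.round(f * 255.0).astype(np.uint8)


def apply_mask(frame: np.ndarray, texture: np.ndarray, start_col: int) -> np.ndarray:
    """Multiply the overlap columns of an H x W x 3 uint8 frame by the mask,
    emulating a multiplicative framebuffer blend (dest = dest * mask)."""
    out = frame.astype(np.float64)
    weights = texture.astype(np.float64) / 255.0
    end_col = start_col + texture.shape[0]
    # Broadcast the per-column weights over all rows and colour channels.
    out[:, start_col:end_col] *= weights[np.newaxis, :, np.newaxis]
    return np.round(out).astype(np.uint8)
```

For the right projector the overlap starts at column 0; for the left projector the same call would use `mask_texture(left_image=True)` with `start_col=768`, the first column of its 256 pixel overlap.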

The image below is a screen dump of what this looks like on the computer (which has a dual display), that is, the panoramic images multiplied by the mask above.

The resulting projected image is given below. Why is there a grey band?

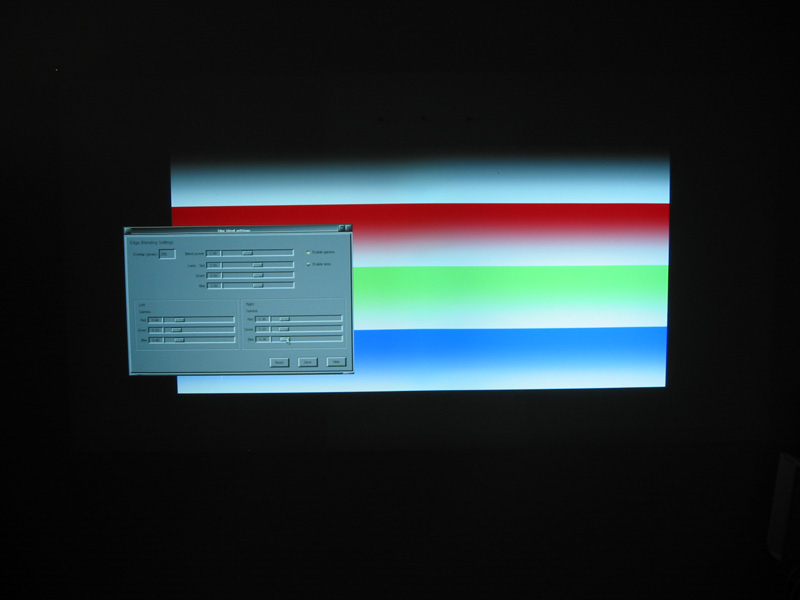

Gamma correction

The reason the blend function above isn't enough, and why there is a grey band in the previous image, is that the technique described so far adds pixel values, whereas what really needs to be added are brightness levels. The main function that controls how pixel values map to brightness is the so-called gamma function of the display. If G is the display gamma, typically between 1.8 and 2.2, then the output brightness is the pixel value (normalised to between 0 and 1) raised to the power G.
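A short worked calculation shows the size of the effect, assuming a pure power-law response with G = 2.2 and a pixel value v at the center of the overlap, where both images are weighted by 0.5:

$$
B_{\text{center}} = (0.5\,v)^{G} + (0.5\,v)^{G} = 2^{1-G}\,v^{G},
\qquad G = 2.2,\ v = 1 \;\Rightarrow\; B_{\text{center}} = 2^{-1.2} \approx 0.435
$$

So at the center of the overlap a full-white pixel is displayed at roughly 44% of its intended brightness v^G = 1, while the two ends of the overlap (weights 0 and 1) are unaffected; hence the darker band.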

Fortunately, this is readily corrected by applying an inverse gamma power. The total transformation of the image pixels is then the functions $f(x)^{1/G}$ and $f(1-x)^{1/G}$.
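The following sketch checks the correction numerically, assuming the same power-law display model and the blend curve form assumed earlier (both are assumptions, not measured projector behaviour). With weights f(x)^(1/G) and f(1-x)^(1/G), the two projectors emit f(x)v^G and f(1-x)v^G, which sum to v^G at every position in the overlap because f(x) + f(1-x) = 1.

```python
def blend(x: float, p: float = 2.0) -> float:
    """Assumed edge blend curve: f(0) = 0, f(0.5) = 0.5, f(1) = 1."""
    if x < 0.5:
        return 0.5 * (2.0 * x) ** p
    return 1.0 - 0.5 * (2.0 * (1.0 - x)) ** p


def gamma_corrected_weights(x: float, gamma: float = 2.2) -> tuple[float, float]:
    """Pixel multipliers (right image, left image) at normalised overlap
    position x: f(x)^(1/G) and f(1-x)^(1/G)."""
    return blend(x) ** (1.0 / gamma), blend(1.0 - x) ** (1.0 / gamma)


def displayed_brightness(v: float, x: float, gamma: float = 2.2,
                         corrected: bool = True) -> float:
    """Total light from both projectors for pixel value v in [0, 1], assuming
    each projector outputs (pixel value)^G."""
    if corrected:
        w_right, w_left = gamma_corrected_weights(x, gamma)
    else:
        w_right, w_left = blend(x), blend(1.0 - x)
    return (w_right * v) ** gamma + (w_left * v) ** gamma
```

With `corrected=False` the center of the overlap reproduces the dark band (about 0.435 for a white pixel); with the correction the brightness matches `v ** 2.2`, the value a single projector would show.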

The image below shows the gamma corrected edge blended image. Note that in general the gamma correction needs to be applied to each of the r, g, b values separately, because of variations between the projectors used.
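Per-channel correction only changes the exponent used for each channel. A minimal NumPy sketch, assuming a gamma has been measured separately for the red, green and blue channels of each projector (the function name and the gamma values in the usage note are hypothetical, and the blend curve form is the one assumed earlier):

```python
import numpy as np


def rgb_mask(overlap: int, gammas: tuple, p: float = 2.0) -> np.ndarray:
    """RGB blend mask for the right-hand image, shape (overlap, 3), values in
    [0, 1]. Channel c holds f(x)^(1/G_c) using that channel's own gamma G_c."""
    x = np.linspace(0.0, 1.0, overlap)
    f = np.where(x < 0.5, 0.5 * (2.0 * x) ** p, 1.0 - 0.5 * (2.0 * (1.0 - x)) ** p)
    g = np.asarray(gammas, dtype=np.float64)             # shape (3,)
    return f[:, np.newaxis] ** (1.0 / g[np.newaxis, :])  # broadcast to (overlap, 3)
```

For example, `rgb_mask(256, (2.2, 2.0, 1.8))` yields a 256 x 3 array that could be uploaded as an RGB texture. For the left-hand projector, reversing the rows of a mask built from its own gammas (`rgb_mask(256, left_gammas)[::-1]`, with `left_gammas` hypothetical) gives f(1-x)^(1/G) because the sample positions are symmetric.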

Further refinements

A modification to the edge blend function that gives some luminance control in the center of the blend region is as follows. "a" ranges from 0 to 1: if it is greater than 0.5 the center of the blend region brightens, and if it is less than 0.5 the center darkens.
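The modified formula is likewise not reproduced in this text. One curve with the described behaviour (an assumption, not the author's quoted formula) scales the two halves of the earlier assumed curve by a and 1 - a, so that f(0.5) = a and a = 0.5 recovers the original. After gamma correction the combined brightness at the center is proportional to f(0.5) + f(1 - 0.5) = 2a, which brightens for a > 0.5 and darkens for a < 0.5.

```python
def blend_a(x: float, p: float = 2.0, a: float = 0.5) -> float:
    """Assumed luminance-adjustable blend curve: a*(2x)^p below the center and
    1 - (1-a)*(2(1-x))^p above it, continuous with f(0.5) = a."""
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must lie in [0, 1]")
    if x < 0.5:
        return a * (2.0 * x) ** p
    return 1.0 - (1.0 - a) * (2.0 * (1.0 - x)) ** p
```

Note that with a other than 0.5 the curve is no longer symmetric, so the left image should use f(1-x), as in the gamma corrected form, rather than 1 - f(x).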

Projector black level

A major limiting factor in how invisible the blending can be made is the degree to which the projector can create black. CRT projectors typically achieve true black, but they are undesirable for other reasons (bulk, calibration, low light levels). LCD projectors typically have very poor black levels. DLP projectors are better (DLP was used for these tests). The key indicator of black level published by manufacturers is the contrast ratio; at the time of writing a number of projectors are rated at around 1500:1 to 2000:1, and some are rated at 3000:1 or even 3500:1. Generally there is a trade-off between projector brightness and contrast: most customers want bright projectors, and this comes at the cost of poorer black levels.
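Black level matters because the blend mask can only scale pixel values: a pixel value of 0 still produces the projector's residual black, and in the overlap two projectors add their black together. A small sketch of that arithmetic, treating the contrast rating as the ratio of full white to black for a single projector (a simplifying assumption; `black_levels` is a hypothetical helper):

```python
def black_levels(contrast: float, n_overlapping: int = 2) -> tuple[float, float]:
    """Black level as a fraction of one projector's full white: outside the
    overlap (one projector) and inside it (n_overlapping projectors showing
    black). Assumes contrast = full white / black for a single projector."""
    if contrast <= 0:
        raise ValueError("contrast must be positive")
    single = 1.0 / contrast
    return single, n_overlapping * single
```

For a 2000:1 projector this gives 0.0005 of full white outside the overlap and 0.001 inside it, so in dark scenes the overlap can show up as a lighter band that the multiplicative blend described above cannot remove.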

Further examples/images

Workflow for modifying a single wide image into an edge blended pair. Image from "Earth's Wild Ride", produced by the Houston Museum of Natural Science and Rice University.

The above makes an important assumption, namely that the surface has Lambertian reflection properties. A Lambertian surface scatters light so that it appears equally bright from all viewing directions. Without this, the reflected light reaching the observer varies depending on their position with respect to the edge blend zone and the projector positions. So in the extreme case of a highly reflective surface, a successful edge blend can only be accomplished if the observer position is tracked and the correct edge blend is computed dynamically.

Blending across the left/right edge of a 360 degree panoramic image

Written by Paul Bourke
March 2006
See also: Edge blending

Even with high end panoramic capture devices such as the Roundshot cameras, it is common for there to be an intensity variation between the left and right ends of the panoramic. This can arise for a variety of reasons, including but not limited to lighting changes, automatic adjustments by the camera, and variations in the scanning process (for high resolution film-based capture). The following illustrates a straightforward and relatively simple approach to correct for this, resulting in an imperceptible difference across the boundary. Note that this is essentially the same as edge blending for projected images, except that it is less sensitive to the exact form of the blend function and gamma correction isn't necessary.

Original image

The following example is used to illustrate the technique. Note that it is necessary to have an overlap at either end; these are the regions that are blended together to form the final panoramic image in those regions.

Wrapping error across 0-360 degree boundary (left/right edge)

If a strip from the left-hand edge of the image above is placed next to the corresponding strip from the right-hand edge, the discontinuity is clear (note the changes in brightness and in shadows). If used in a virtual environment, this discontinuity would be an objectionable artefact.

Blending mask (multiplicative)

The solution is to create a weighted sum of pixel values from the two overlap regions. The following shows the weighting function; in this example a linear ramp is used.

Mask applied to panoramic image

The following shows the mask applied to the input image. The weight W applied (multiplicatively) to a pixel at one end is applied as (1-W) to the corresponding pixel at the other end of the panoramic image.

Final panoramic image

This shows the final image with the overlap region removed; this is the desired cylindrical panoramic image.
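The steps above (a weighted sum using a linear ramp W and 1 - W, then dropping the duplicated strip) can be sketched with NumPy as follows. It assumes the first and last `overlap` columns of the input show the same part of the scene; `blend_wraparound` is a hypothetical name, and the result is returned as floating point for the caller to convert.

```python
import numpy as np


def blend_wraparound(pano: np.ndarray, overlap: int) -> np.ndarray:
    """Blend the two ends of a panorama covering slightly more than 360 degrees.

    Works for H x W (greyscale) or H x W x C images. Returns a float64 image
    `overlap` columns narrower whose left and right edges join without a seam."""
    width = pano.shape[1]
    if not 0 < overlap <= width // 2:
        raise ValueError("overlap must be between 1 and half the image width")
    img = pano.astype(np.float64)
    # Linear ramp W: 0 at the outer edge of the left overlap, 1 where it meets the interior.
    w = np.linspace(0.0, 1.0, overlap)
    w = w.reshape((1, overlap) + (1,) * (img.ndim - 2))  # broadcast over rows (and channels)
    left_end = img[:, :overlap]
    right_end = img[:, width - overlap:]
    blended = w * left_end + (1.0 - w) * right_end       # weights W and 1 - W
    # Keep the blended strip and the untouched interior; drop the duplicated right end.
    return np.concatenate([blended, img[:, overlap:width - overlap]], axis=1)
```

The first output column comes entirely from the start of the right-hand overlap, which originally adjoined the last column kept in the output, and the last blended column comes entirely from the left-hand end, which adjoins the interior. Both edges of the result therefore continue smoothly when the panoramic is wrapped.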

Resulting continuity across the 0-360 degree boundary

Applying the same procedure as in figure 2 shows no perceptible discontinuity.
