Computer Generated

Written by Paul Bourke

Figures 1 to 8

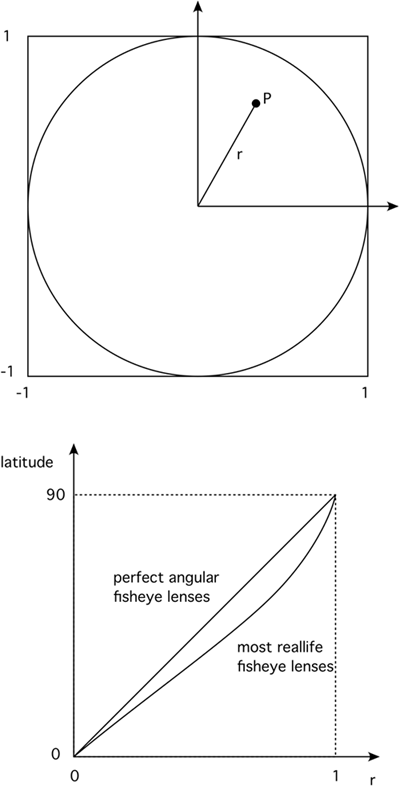

Real fisheye lenses rarely follow the precise linear relationship between radius on the fisheye image plane and latitude. The most common form of deviation is a compression of the image towards the rim of the fisheye circle. Of course if this non-linearity is a problem it can be corrected for, at the expense of some loss of resolution at the rim.

Appendix: Approximations

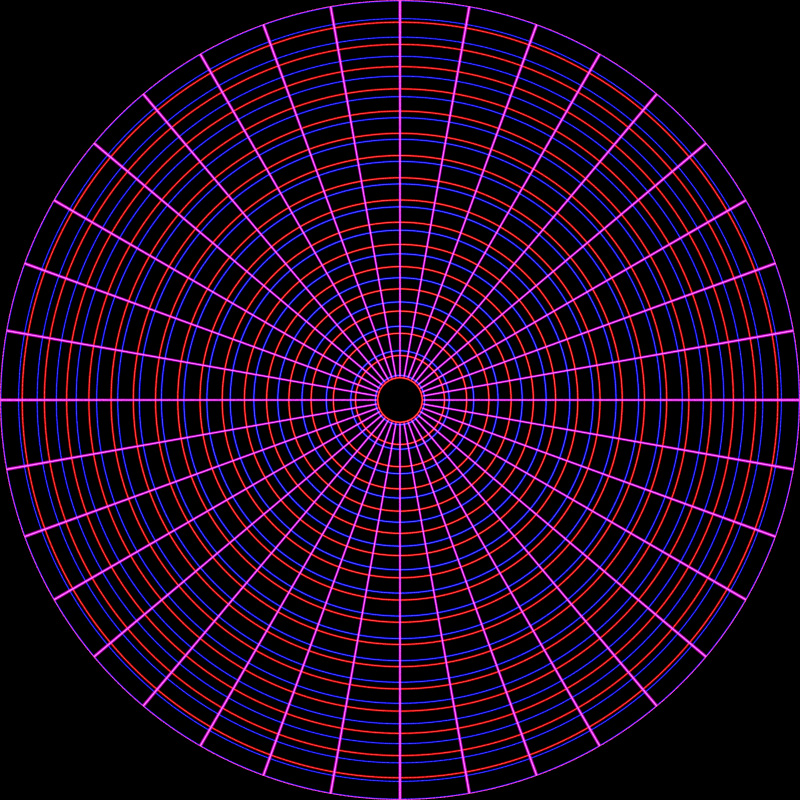

Some raytracing packages don't support a native fisheye camera, so the question is often asked: "can a spherical mirror be used to create a fisheye view?". The basic idea is to render the view of the scene by pointing the virtual camera at a perfectly reflective spherical surface. The answer is "the result is close but not exactly correct". Three renderings are shown below; the scene is simply a hemisphere represented as a regular grid of lines of latitude and longitude.

Overlap of fisheye and orthographic mirror

The following is the perfect fisheye superimposed on the orthographic rendering. The biggest difference is around the mid latitudes. For example, if such a projection were used in a planetarium then objects that remain at the same distance from the camera but move from the pole to the horizon would appear to change size.
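The size of the mismatch can be estimated with a short calculation. The following is a minimal sketch, assuming an orthographic camera looking straight down onto a reflective unit sphere and an ideal fisheye whose angle from the pole is linear in radius. The function names and the normalised radius `rho` are illustrative and not taken from any rendering package.

```python
import math

def fisheye_angle(rho):
    """Ideal angular fisheye: angle from the pole (degrees) is linear in radius."""
    return 90.0 * rho

def mirror_angle(rho):
    """Angle from the pole (degrees) seen via an orthographic view of a mirror sphere.

    A camera ray that hits the unit sphere at radius r is reflected at
    2*asin(r) from the camera axis, so the horizon (90 degrees) lies at
    r = sin(45 degrees). rho is the radius normalised so that rho = 1 is
    that horizon circle.
    """
    r = rho * math.sin(math.radians(45.0))
    return math.degrees(2.0 * math.asin(r))

def max_deviation(steps=1000):
    """Return (largest difference in degrees, rho at which it occurs)."""
    best = (0.0, 0.0)
    for i in range(steps + 1):
        rho = i / steps
        diff = fisheye_angle(rho) - mirror_angle(rho)
        if abs(diff) > abs(best[0]):
            best = (diff, rho)
    return best
```

Under these assumptions the two mappings agree at the pole and at the horizon, and `max_deviation()` reports where between them the disagreement peaks, which is consistent with the difference being largest around the mid latitudes.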

It should be noted that a mirror (not spherical) can be designed such that the correct result is achieved. In the author's opinion it is vastly better to render cubic maps from which correct fisheye projections can be derived.

Offaxis fisheye projection

Written by Paul Bourke, October 2001, modified January 2004

There exist a number of full dome environments that project angular fisheye or other radial functions. If the angular fisheye is created correctly then the resulting image, after projection onto the dome surface, appears undistorted. Unfortunately, if the projector uses a single fisheye lens then the standard fisheye projection as described here requires that both the lens and the viewer are located at the center of the dome, which is obviously impossible. As the viewer moves away from the center the image appears increasingly distorted. This isn't normally a problem for large planetariums, where the radius of the dome is very much larger than the seating area, and in any case nothing can be done since there are multiple viewers. For smaller domes the effect is more marked and the viewer can easily move significant distances from the center. It is possible to create a modified fisheye image so that, if viewed from a particular position, the projected image will appear undistorted; this is called an off-axis fisheye image. This is the same principle employed when creating stereo pairs, where one uses an off-axis (asymmetric) perspective frustum for each eye.

Algorithm


Creating an off-axis fisheye is quite straightforward. First, create the fisheye projection world vector p as described here. The vector p' is derived as shown in the diagram on the right: the new ray p' is the ray p minus the vector to the view position. While the diagram shows the view position along the y axis, the correction is normally applied to any point in the x,y plane, as the dome viewer will most commonly move around on that plane. Note that as the viewer moves towards the rim the image gets increasingly stretched in that direction. This violates the usual characteristic of angular fisheye images, where distance in image space is proportional to angle in fisheye space. Put another way, in a standard angular fisheye all pixels have the same dimensions in the projected image, and this is no longer the case for the off-axis image, as can be seen in the example below.
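The two steps can be sketched as follows. This is a minimal illustration rather than the author's code: it assumes the dome pole lies along +z with the rim in the x,y plane, a dome of unit radius, and fisheye image coordinates normalised to the unit circle. The function names `fisheye_vector` and `offaxis_ray` are hypothetical.

```python
import math

def fisheye_vector(x, y, aperture=math.pi):
    """Unit vector p for a point (x, y) inside the fisheye circle.

    Assumed convention: pole along +z, rim in the x,y plane.
    Returns None for points outside the fisheye circle.
    """
    r = math.hypot(x, y)
    if r > 1.0:
        return None
    phi = r * aperture / 2.0     # angle from the pole, linear in radius
    theta = math.atan2(y, x)     # angle around the pole
    return (math.sin(phi) * math.cos(theta),
            math.sin(phi) * math.sin(theta),
            math.cos(phi))

def offaxis_ray(p, view):
    """p' = p - view, normalised. view is the viewer position in dome radii."""
    d = (p[0] - view[0], p[1] - view[1], p[2] - view[2])
    n = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
    if n == 0.0:
        return None              # viewer sits exactly at the dome point p
    return (d[0] / n, d[1] / n, d[2] / n)

# Example: the ray to trace for image point (0, 0.5) with the viewer
# halfway between the center and the rim along the y axis.
p = fisheye_vector(0.0, 0.5)
ray = offaxis_ray(p, (0.0, 0.5, 0.0))
```

With a view position of (0, 0, 0) the ray is unchanged, which recovers the standard fisheye.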


A common approach to creating content for dome environments is to render standard projections onto the faces of a cube. The off-axis fisheye can be computed from these at interactive rates and so a single viewer with a head tracking device can be presented with a corrected image as they walk around the base of the dome.
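Looking up a cube face from a ray direction is the core of that computation. The sketch below shows one common way to do it, by picking the face along the dominant axis. The face names and the orientation of the (u, v) coordinates on each face are assumptions made for illustration, and would need to match whatever layout the renderer actually produced.

```python
def cube_face_lookup(d):
    """Map a direction d = (x, y, z) to (face, u, v) with u, v in [-1, 1].

    Face names and the u, v orientation are illustrative assumptions.
    """
    x, y, z = d
    ax, ay, az = abs(x), abs(y), abs(z)
    m = max(ax, ay, az)
    if m == 0.0:
        raise ValueError("zero-length direction")
    if m == az:
        # Ray leaves through the top or bottom face.
        face = "+z" if z > 0 else "-z"
        u, v = x / az, y / az
    elif m == ax:
        face = "+x" if x > 0 else "-x"
        u, v = y / ax, z / ax
    else:
        face = "+y" if y > 0 else "-y"
        u, v = x / ay, z / ay
    return face, u, v
```

For each fisheye pixel, the off-axis ray direction would be passed to a function like this and the colour read from the returned face at (u, v). Because only a lookup and a few divisions are needed per pixel, this kind of mapping is cheap to evaluate.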

Test Pattern

The following test pattern was used to test the correctness of the off-axis fisheye. It is a rendering of a cubic room in which each wall is a different colour and is constructed with a regular grid of bars. If the fisheye generation is correct, the curved lines in the fisheye image should appear straight when projected onto the dome. The coordinate above each image is the position of the viewer.

Offset = (0,0)

Offset = (0,0.5)

Offset = (0,0.75)

Offset = (0,0.95)

Offset = (0.5,0.5)

Offset = (0.7,0.7)

This example is rendered in PovRay onto a 5 wall cubic environment map, and the off-axis fisheye is created from these 5 images (5view.ini, 5view.pov, 5viewcamera.pov). This has the advantage of being able to create multiple off-axis fisheye images from one set of cubic environment maps, which would not be possible if the off-axis fisheye was rendered directly in PovRay (something that is easy to arrange by modifying the PovRay source code).


Creating offaxis projections from existing fisheye images

Given an existing fisheye image, an off-axis fisheye can be created for any off-axis position. There is a full mapping for 180 degree fisheye images; smaller angle images result in an incomplete mapping. The code here is a simple command line based UNIX utility that reads a TGA file containing a fisheye image and writes the off-axis fisheye as another TGA file. It supports supersampling antialiasing and variable output image size. Note that there are important resolution issues to consider: this works best when going from very high resolution fisheye images to lower resolution ones.

    offaxis tgafilename [options]
    Options:
       -a n     set antialias level (Default: 1)
       -w n     width of the output image (Default: 500)
       -h n     height of the output image (Default: width)
       -dx n    x component of the offaxis vector (Default: 0)
       -dy n    y component of the offaxis vector (Default: 0)
       -v       debug/verbose mode (Default: off)
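The remapping itself can be sketched in a few lines. The following is not the utility's source; it is a minimal illustration under stated assumptions: a 180 degree source fisheye filling a square greyscale image held as a list of rows, the pole along +z, nearest-neighbour lookup, and no TGA reading or writing. The function names are hypothetical, and the parameters `dx`, `dy`, `antialias`, `width` and `height` simply mirror the options listed above.

```python
import math

def source_coords(x, y, dx, dy):
    """For output fisheye point (x, y), return the point of the source
    180 degree fisheye to sample, or None if there is no mapping."""
    r = math.hypot(x, y)
    if r > 1.0:
        return None
    phi = r * math.pi / 2.0
    theta = math.atan2(y, x)
    # Point on the unit dome minus the viewer offset in the rim plane.
    px = math.sin(phi) * math.cos(theta) - dx
    py = math.sin(phi) * math.sin(theta) - dy
    pz = math.cos(phi)
    # Back to fisheye coordinates: radius is linear in angle from the pole.
    phi2 = math.atan2(math.hypot(px, py), pz)
    rs = phi2 / (math.pi / 2.0)
    if rs > 1.0:
        return None
    theta2 = math.atan2(py, px)
    return rs * math.cos(theta2), rs * math.sin(theta2)

def remap(src, width, height, dx=0.0, dy=0.0, antialias=1, background=0.0):
    """Build an off-axis fisheye (list of rows) from a square source image."""
    n = len(src)
    out = []
    for j in range(height):
        row = []
        for i in range(width):
            total = 0.0
            for sj in range(antialias):
                for si in range(antialias):
                    # Sub-pixel sample position mapped to [-1, 1].
                    x = 2.0 * (i + (si + 0.5) / antialias) / width - 1.0
                    y = 2.0 * (j + (sj + 0.5) / antialias) / height - 1.0
                    s = source_coords(x, y, dx, dy)
                    if s is None:
                        total += background
                        continue
                    u = min(n - 1, int((s[0] + 1.0) * 0.5 * n))
                    v = min(n - 1, int((s[1] + 1.0) * 0.5 * n))
                    total += src[v][u]
            row.append(total / (antialias * antialias))
        out.append(row)
    return out
```

Each output pixel is an average of `antialias` squared samples, so a high resolution source combined with a smaller output and an antialias level above 1 gives the smoothest result. For offsets in the rim plane the z component of the ray is unchanged, so every point inside the output circle maps to a point inside a 180 degree source.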

Original fisheye image

X axis offset of 0.3

Y axis offset of 0.3

X and Y axis offset of 0.3

Photos of a Prototype Dome

Example Images used in the Prototype Dome

Note that for these tests an Elumens projector was used. This is a standard projector fitted with their fisheye lens, and as such it projects a 4:3 width to height ratio while fisheye images are 1:1. This is addressed by clipping off 25% of the image; in the case of the Elumens dome this is normally the bottom 25%. In our case we chose to clip the top 25%, which conveniently gave the viewers a position behind the projector from which to view the images without being blinded.


Addendum

The off-axis correction as described above only applies to shifting the observer around on the rim plane of the hemisphere. A similar correction can be made to compensate for the observer being within the dome or, in the more likely case, away from the rim of the dome (along the negative z axis, see figure 1). This correction is applied in exactly the same way, but note that it results in a reduced field of view, unlike offset positions on the rim plane, which result in stretching distortion but the same field of view.
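The reduction in field of view for the more likely case can be estimated directly from the same subtraction of the view position. This is a small sketch, assuming a unit radius dome with its pole along +z and an observer on the axis at (0, 0, -dz). The function name is hypothetical.

```python
import math

def field_of_view_deg(dz):
    """Angular extent of the dome, in degrees, seen from (0, 0, -dz), dz >= 0.

    A rim point p = (1, 0, 0) minus the view position (0, 0, -dz) gives
    p' = (1, 0, dz), which lies atan2(1, dz) from the pole.
    """
    return 2.0 * math.degrees(math.atan2(1.0, dz))

# An observer on the rim plane sees the full 180 degrees, and the extent
# shrinks as the observer backs away from the rim.
for dz in (0.0, 0.5, 1.0):
    print(dz, round(field_of_view_deg(dz), 2))
```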