Repeatability of reconstructed models

Report by Paul Bourke, September 2014

3D reconstruction from photographic sets is a rapidly growing technology for capturing 3D models in archaeology, heritage, and geology, to mention just a few application areas. The algorithms are still under development, as are the quality of cameras and the understanding (experience) of how to take optimally positioned photographs. As a result the quality of reconstructions is improving.

An obvious question concerns accuracy. Often, though, what is meant by accuracy is not well defined, and indeed the definition may vary depending on the goals of the discipline. For example, for geographical purposes accuracy may focus on the position of the reconstructed surface or object on the Earth's surface and on being able to perform accurate measurements in the plane. When capturing an artefact, by contrast, the orientation and global scale may be largely unimportant; what is required is a high quality digital representation of the form of the object in question.

The challenge in quantifying accuracy is the lack of a digital ground truth. One approach is to use multiple digitisation modalities, but they all have inherent errors. Laser scanning is often proposed as the most accurate method, but errors remain, especially when an object requires multiple scanner locations and the resulting scans need to be combined. Without a ground truth model, how does one know whether any errors lie in one form of capture or the other? The analysis in this document was an attempt to capture a heritage object, fix its size in one dimension from a known measurement and then verify that the dimensions of other features were correct. One way of getting a handle on the accuracy is to test the reproducibility of multiple reconstructions, that is, to reconstruct an object multiple times using a different set of photographs each time.

The remainder of this document presents the outcome of this investigation. It is not intended to be a comprehensive test in itself but a methodology others can follow to evaluate this type of error in their own reconstruction practice. The reconstruction software used for this exercise is "PhotoScan" and the point cloud comparison software is "CloudCompare". Nine sets of 3 photographs (27 photographs in all) were taken of a small statue.

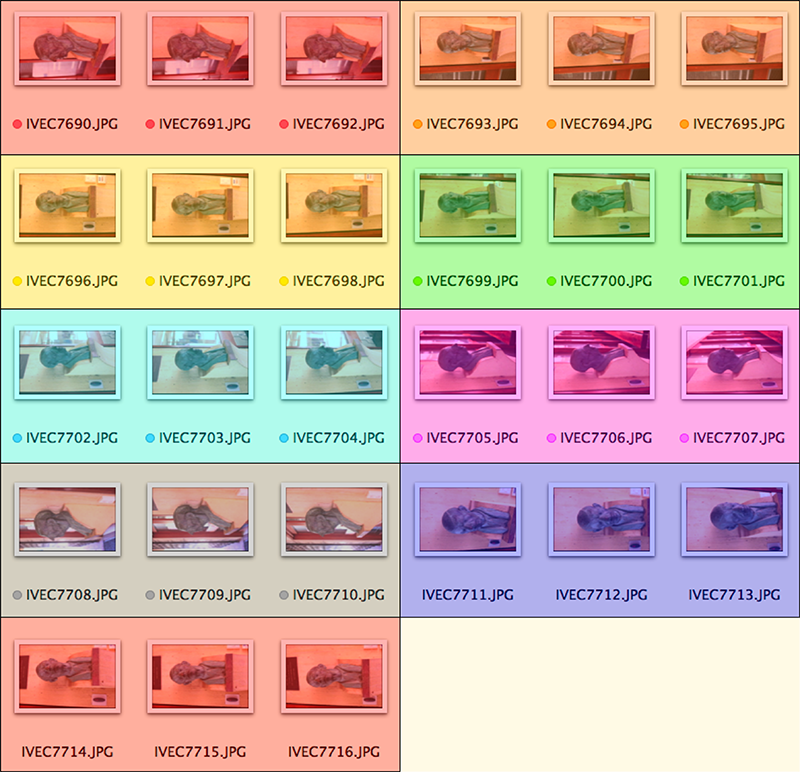

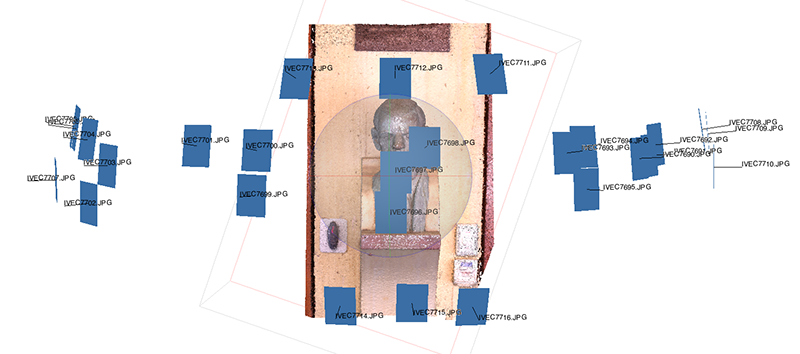

The image below shows the derived camera positions and illustrates the photographic strategy: each of the 9 camera positions was slightly offset 3 times. This allows a large number of reconstructions to be created, each using one photograph selected from each of the 9 positions. While this is not a random photographic set, nor a random sample from a larger photographic set, the justification is that anyone engaging in photography for reconstruction does need an appreciation of the optimal way to photograph. The 9 positions shown are an appropriate set for this object, so variations have been chosen only at those positions.

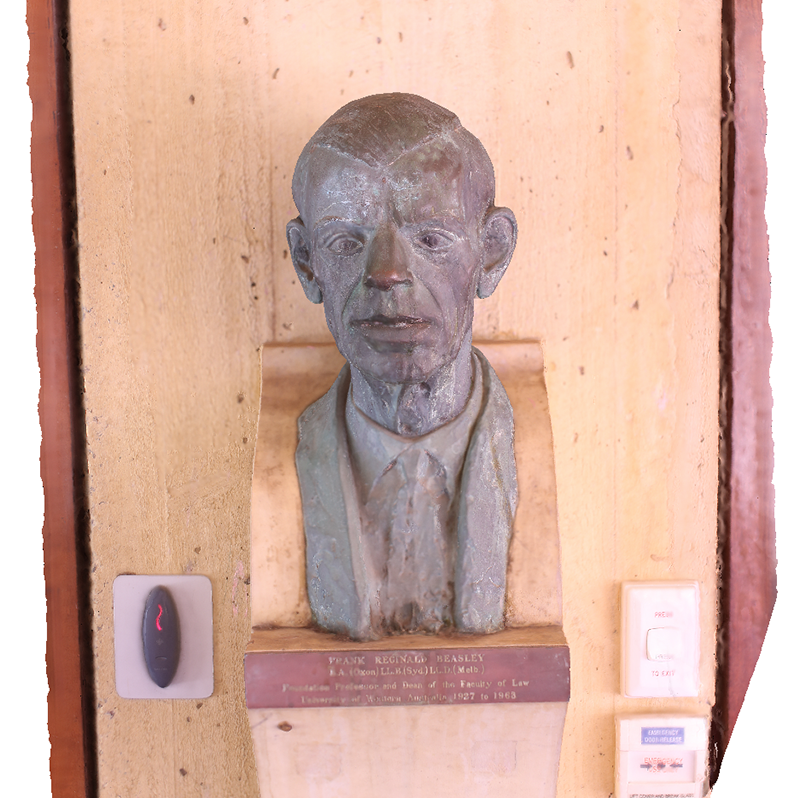
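The selection scheme described above (one photograph from each of the 9 positions, 3 candidates per position) can be enumerated directly. The following is only a minimal sketch: the file naming pattern `posN_shotM.jpg` and the function names are hypothetical and not taken from the original exercise.

```python
from itertools import product

POSITIONS = 9  # camera positions around the object
OFFSETS = 3    # slightly offset photographs taken at each position


def photo_sets(positions=POSITIONS, offsets=OFFSETS):
    """Iterate over every way of picking one photograph per position.

    Each item is a tuple of length `positions`; entry i is the index
    (0 .. offsets-1) of the photograph chosen at position i.
    """
    return product(range(offsets), repeat=positions)


def photo_names(selection):
    """Map a selection to hypothetical file names such as 'pos3_shot1.jpg'."""
    return [f"pos{p + 1}_shot{s + 1}.jpg" for p, s in enumerate(selection)]


# Count all possible 9-photograph sets: 3 choices at each of 9 positions.
total = sum(1 for _ in photo_sets())
first = next(iter(photo_sets()))

print(total)               # 3**9 = 19683
print(photo_names(first))  # the set using the first shot at every position
```

In practice only a handful of these sets would be reconstructed; the count simply shows how many distinct reconstructions the 27 photographs could support.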

The models reconstructed from each photograph set are processed identically, with the total triangle count limited to 1 million. The reconstruction using all 27 photographs is shown below.

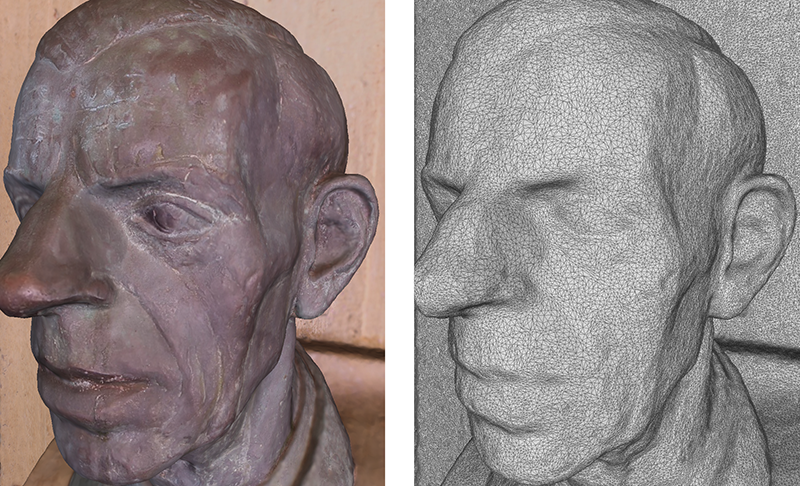

A further example: a zoomed-in portion of the model reconstructed from all 27 photographs.

The point cloud and camera positions from one of the many possible sets of 9 photographs are shown below.

The zoomed-in portion of the resulting reconstructed model (from just 9 photographs) is similar to the zoomed view shown above for the full photograph set.

The comparison for repeatability involves comparing the models derived from the many possible 9 photograph sets with the model derived from all 27 photographs. This is not to suggest that the 27 photograph model is necessarily better, but one model needs to be chosen as the basis for the subsequent comparisons. A refinement might be to compare all models with all models to find the pair with maximum deviation. Since the various reconstructions, while generally of the same scale, were randomly orientated, four matching points were selected on every pair of models and the two models aligned so that those 4 points coincide. Note that the alignment involves translation, rotation, and a single uniform scale (one scale factor for all three axes). This last point is important because one is interested in potential differential scaling errors along the different axes, which a separate scale factor per axis in the alignment would hide.
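As an illustration of an alignment restricted to translation, rotation and one uniform scale, the sketch below fits such a transform to matched point pairs by least squares (the SVD-based method usually attributed to Umeyama). This is an assumption about how such an alignment can be computed, not a description of what CloudCompare does internally; the function name and the test points are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np


def similarity_align(src, dst):
    """Least-squares similarity transform mapping src points onto dst.

    src, dst: (N, 3) arrays of matching points, N >= 3 and not collinear.
    Returns (s, R, t) such that dst ~= s * src @ R.T + t, with a single
    uniform scale s, a proper rotation R and a translation t.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)            # 3x3 cross-covariance
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0                    # avoid returning a reflection
    R = U @ S @ Vt
    var_s = (xs ** 2).sum() / len(src)    # variance of the source points
    s = np.trace(np.diag(D) @ S) / var_s  # one scale for all three axes
    t = mu_d - s * R @ mu_s
    return s, R, t


# Hypothetical example: four picked points and a known transform of them.
rng = np.random.default_rng(0)
picked = rng.normal(size=(4, 3))
angle = 0.7
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
s_true, t_true = 1.05, np.array([10.0, -2.0, 3.0])
moved = s_true * picked @ R_true.T + t_true

s, R, t = similarity_align(picked, moved)
aligned = s * picked @ R.T + t
residual = np.linalg.norm(aligned - moved, axis=1).max()
```

With real picked points the four pairs will generally coincide only in the least-squares sense, and any remaining per-axis stretch shows up in the residuals rather than being absorbed by the fit.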

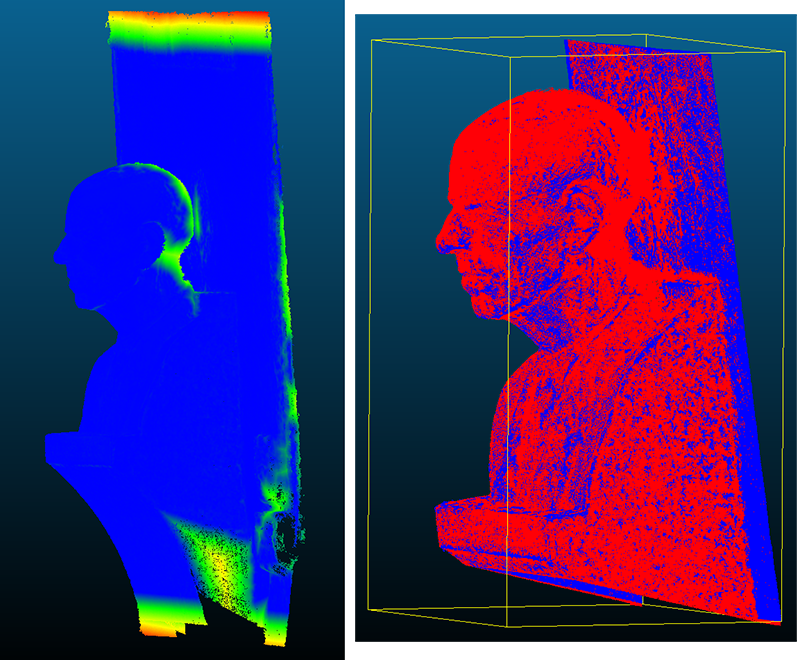

As expected, the largest variations between a representative reconstruction from 9 photographs and the reference from 27 photographs are towards the border of the reconstructed model, noting that slightly different photograph coverage resulted in slightly different regions being reconstructed. The image on the left illustrates the variation between the meshes (the colour scale is biased by the extreme boundary points). On the right one mesh is drawn in red and the other in blue, showing the ripple error, particularly on the flat surfaces.
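A simple way to put a number on this kind of variation, once two models are aligned, is the distance from each point of one cloud to its nearest neighbour in the other. The sketch below is a brute-force illustration on synthetic data (a flat grid and a copy with a small ripple), assuming NumPy; it is not the metric or implementation used by CloudCompare, and real clouds would need a spatial index such as an octree or k-d tree.

```python
import numpy as np


def nearest_distances(cloud, reference):
    """For each point in cloud, distance to the closest point in reference.

    Brute force: builds all N*M pairwise differences, so it is only
    suitable for small illustrative clouds.
    """
    cloud = np.asarray(cloud, dtype=float)
    reference = np.asarray(reference, dtype=float)
    diff = cloud[:, None, :] - reference[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)


# Hypothetical data: a flat 10 x 10 grid and a copy with a ripple in z.
g = np.arange(10.0)
x, y = np.meshgrid(g, g)
reference = np.column_stack([x.ravel(), y.ravel(), np.zeros(x.size)])
rippled = reference.copy()
rippled[:, 2] = 0.1 * np.sin(x.ravel())

d = nearest_distances(rippled, reference)
print("max deviation:", d.max())
print("mean deviation:", d.mean())
```

Reporting both the maximum and the mean is useful here because, as noted above, a few extreme boundary points can dominate the maximum and the colour scale.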

Four of the many possible reconstructions are shown below. The gaps behind the head, which don't appear in the reconstruction from the full 27 photographs, are simply due to lower photographic coverage in those regions. The visual similarity between these point clouds is clear.

The evaluation here is quantitative and incomplete but encouraging. The variation between multiple reconstructions was minimal: less than 2 mm across the head portion of this model, which has an overall dimension of about 300 mm, that is, under 1% of the overall size. Over-photographing a model for reconstruction might also allow one to do multiple reconstructions and use the average point cloud for the final meshing.